2023 Post-doctoral Non-clinical Fellowship

A Foundational Model for Neurological Language

Neurology’s reliance on unstructured text as its primary mode of communication reflects its essential complexity. Its resistance to structured description proceeds from the extraordinary richness of neurological phenomena: not only those arising in the direct clinical encounter, but also those in the neuroanatomy, physiology, and pathology that investigations reveal. This places those who wish to model neurological phenomena in a Catch-22: crafting high-fidelity structured descriptions from clinical records requires large-scale, fully inclusive data commensurate with the complexity of the domain, yet rendering rich data analysable at scale, inevitably by automated means, requires high-fidelity structured descriptions in the first place.

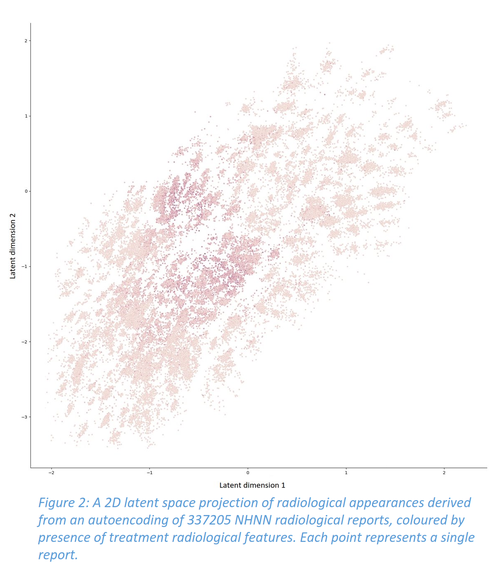

I propose to create a comprehensive foundational model of neurological language by engineering locally deployable, highly expressive, general-purpose large language models (LLMs). The model, Neurobase, will acquire the ability to interpret complex neurological language by combining the logico-grammatical proficiency inherited from its parent language model with neurology-specific expertise acquired from large-scale inpatient and outpatient unstructured text records. Like its parent LLM, Neurobase will be flexibly instructible in natural language, permitting non-technical users to perform tasks. For example, one could extract specific phenotypic features from a set of clinic letters simply by asking a question, while preserving privacy and ensuring alignment with the task. In addition, I shall use the model’s internal, latent representations of neurological descriptions to derive comprehensive, organised deep phenotypes of neurological patients, automatically capturing complex interactions between individual features. Such models have potential utility beyond feature extraction itself: they also provide practical tools that I aim to host within the hospital for active use, not only in an academic setting.